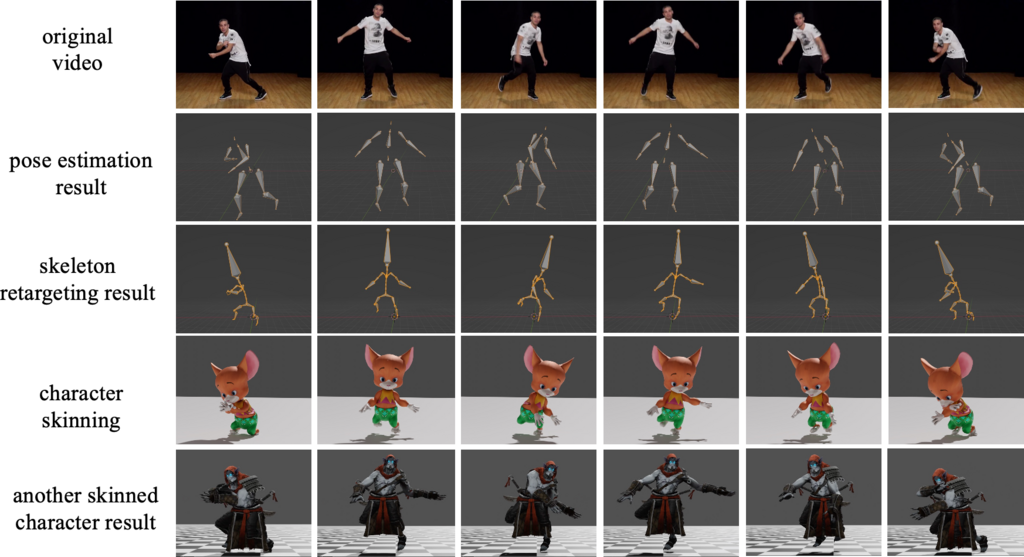

We introduce a motion retargeting framework that animates characters with distinct skeletal structures using video data. While prior studies have successfully performed motion retargeting between skeletons with different structures, retargeting the noisy and unnatural motion data extracted from monocular videos has proved challenging. To address this issue, we propose a deep learning framework that retargets motion data obtained from easily accessible monocular videos to characters with diverse skeletal structures. Our approach is intended to support individual creators in character animation.

Our proposed framework pre-processes motion data derived from multiple monocular videos with two-stage pose estimation and uses the result as the training dataset for the Skeleton-Aware Motion Retargeting Network (SAMRN). In addition, we introduce a loss function on the rotation angle of the character’s root node to address the rotation issue inherent in SAMRN. Furthermore, by incorporating motion data extracted from videos and adding a loss function on the velocities of the character’s root node and end-effectors, the proposed method generates natural motion data that is closely aligned with the source video. We demonstrate the effectiveness of the proposed framework for motion retargeting between monocular videos and various characters through both qualitative and quantitative evaluations.
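To make the two added loss terms concrete, the following is a minimal sketch of how a velocity loss and a root-rotation loss could be written. It is an illustration under stated assumptions, not the formulation used in the paper: the function names, the finite-difference velocity, the mean-squared-error form, the `fps` parameter, and the reduction of the root rotation to a single yaw angle in radians are all hypothetical choices made for this example.

```python
import numpy as np


def velocity(positions, fps=30.0):
    """Finite-difference velocity of positions with shape (T, ..., 3).

    Assumption: frames are uniformly sampled at `fps` frames per second.
    """
    return np.diff(positions, axis=0) * fps


def velocity_loss(src_pos, out_pos, fps=30.0):
    """Mean squared difference between source and retargeted velocities.

    Can be applied to the root trajectory, shape (T, 3), or to a stack of
    end-effector trajectories, shape (T, E, 3). Assumed form, not the paper's.
    """
    return float(np.mean((velocity(src_pos, fps) - velocity(out_pos, fps)) ** 2))


def root_rotation_loss(src_yaw, out_yaw):
    """Mean squared angular difference between root yaw angles (radians).

    The difference is wrapped to (-pi, pi] so that angles differing by a
    full turn count as equal. Assumed form, not the paper's.
    """
    delta = src_yaw - out_yaw
    wrapped = np.arctan2(np.sin(delta), np.cos(delta))
    return float(np.mean(wrapped ** 2))
```

Because the velocity term compares frame-to-frame differences rather than absolute positions, a constant offset between the source and retargeted trajectories does not contribute to it. In practice, velocities would likely need to be normalized for characters of different sizes; that step is omitted here.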

Presentation Video

Paper

- Xin Huang, Takashi Kanai: “Video-Based Motion Retargeting Framework between Characters with Various Skeleton Structure”, 16th ACM SIGGRAPH Conference on Motion, Interaction and Games (MIG 2023), Article No.24, pp.1-6, 2023.

Paper (author’s version)

Paper (publisher’s version)

Supplemental Video (Youtube)