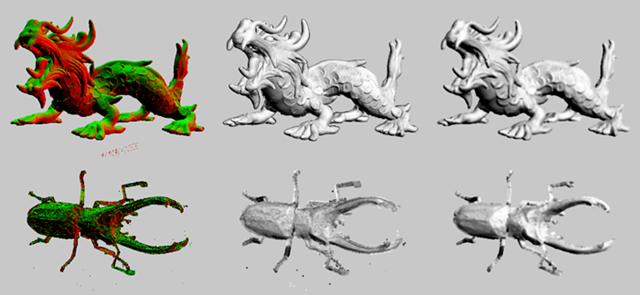

In this paper, we propose a method for directly rendering point sets that carry only positional information, using recent graphics processors (GPUs). Almost all of the algorithms in our method run on the GPU. Our point-based rendering algorithms use an image buffer whose resolution is lower than that of the frame buffer. Normal vectors are computed, and various types of noise are reduced, on this image buffer. As a result, our approach produces high-quality images even for noisy point clouds, in particular those acquired by 3D scanning devices. Our approach also uses splats in the actual rendering process. However, because only the points selected on the image buffer are rendered, the number of rendered points is generally smaller than the number of input points, which makes our approach faster than previous GPU-based point rendering approaches.
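To make the image-buffer idea concrete, the following is a minimal CPU-side sketch in Python, not the GPU implementation from the paper. It assumes an orthographic projection of points in [-1, 1] x [-1, 1], treats a smaller z as nearer to the viewer, and estimates normals with a simple cross product of neighboring buffer entries. The function names (`build_position_buffer`, `selected_points`, `estimate_normal`) are hypothetical, and the normal estimator is a stand-in, since the abstract does not specify the paper's normal computation or noise reduction steps.

```python
import math


def build_position_buffer(points, width, height):
    """Project points onto a low-resolution buffer, keeping one point per pixel.

    Assumptions (not from the paper): orthographic projection along z,
    x and y in [-1, 1], and smaller z meaning closer to the viewer.
    """
    buf = [[None] * width for _ in range(height)]
    for x, y, z in points:
        if not (-1.0 <= x <= 1.0 and -1.0 <= y <= 1.0):
            continue  # outside the assumed view volume
        px = min(int((x + 1.0) * 0.5 * width), width - 1)
        py = min(int((y + 1.0) * 0.5 * height), height - 1)
        current = buf[py][px]
        if current is None or z < current[2]:
            buf[py][px] = (x, y, z)  # keep the nearest point only
    return buf


def selected_points(buf):
    """Return the points that survived selection (at most one per pixel)."""
    return [p for row in buf for p in row if p is not None]


def estimate_normal(buf, px, py):
    """Estimate a unit normal at an interior pixel from its four neighbors.

    Uses central differences of the stored positions and their cross
    product. Returns None at the border or where a neighbor is empty.
    """
    height, width = len(buf), len(buf[0])
    if not (0 < px < width - 1 and 0 < py < height - 1):
        return None
    left, right = buf[py][px - 1], buf[py][px + 1]
    down, up = buf[py - 1][px], buf[py + 1][px]
    if any(p is None for p in (left, right, down, up)):
        return None
    dx = [right[i] - left[i] for i in range(3)]
    dy = [up[i] - down[i] for i in range(3)]
    n = (
        dx[1] * dy[2] - dx[2] * dy[1],
        dx[2] * dy[0] - dx[0] * dy[2],
        dx[0] * dy[1] - dx[1] * dy[0],
    )
    length = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    if length == 0.0:
        return None
    return (n[0] / length, n[1] / length, n[2] / length)
```

Because the buffer holds at most one point per pixel, a dense input cloud collapses to at most `width * height` selected points, which is the reason the number of rendered splats can be smaller than the number of input points.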

Papers

- Hiroaki Kawata, Takashi Kanai: “Direct Point Rendering on GPU”, Proc. International Symposium on Visual Computing (ISVC) 2005 (Lake Tahoe, Nevada, U.S.A., 5-7 December 2005), Lecture Notes in Computer Science Vol. 3804, pp. 587-594, Springer-Verlag, Heidelberg, 2005. [paper (Adobe PDF) (681KB)]