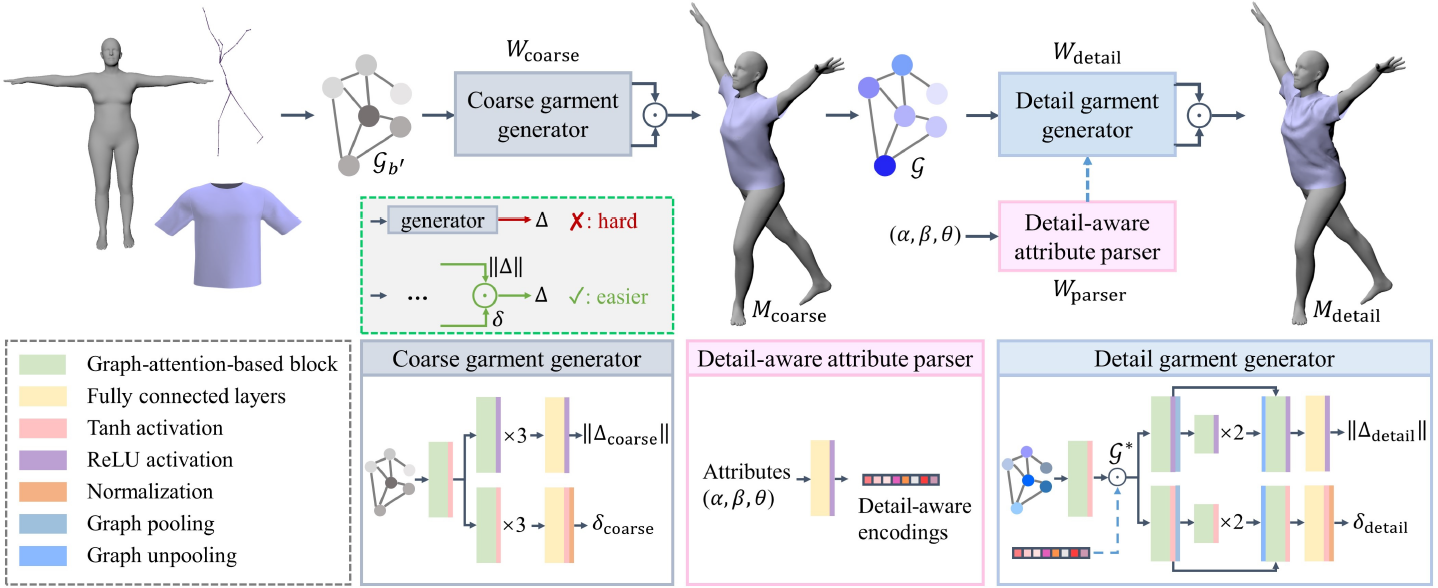

This paper presents a novel learning-based clothing deformation method that generates rich and plausible detailed deformations for garments worn by bodies of various shapes across diverse animations. Existing learning-based methods require many separately trained models for different garment topologies or poses and struggle to produce rich details. In contrast, we use a unified framework that produces high-fidelity deformations efficiently. Specifically, we first observe that the fit between the garment and the body strongly affects the degree of folding. We then design an attribute parser that generates detail-aware encodings and infuses them into a graph neural network, thereby improving its ability to discriminate details under diverse attributes. Furthermore, to achieve better convergence and avoid overly smooth deformations, we propose reconstructing the output to reduce the complexity of the learning task. Experimental results show that our method outperforms existing methods in both generalization ability and detail quality.
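To make the idea of infusing attribute encodings into a graph neural network more concrete, here is a minimal sketch in plain Python. It is not the authors' architecture. It assumes that the garment-body fit can be summarized as a single hypothetical size ratio (1.0 meaning an exact fit), that a toy "attribute parser" maps this ratio to a one-hot fit class plus the raw value, and that this garment-level encoding is appended to every vertex feature before one unweighted message-passing step. The names `encode_fit`, `infuse`, and `message_pass` and the thresholds `(0.9, 1.1)` are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def encode_fit(fit: float, thresholds: Sequence[float] = (0.9, 1.1)) -> Vector:
    """Hypothetical attribute parser (illustration only).

    `fit` is assumed to be a garment-to-body size ratio (1.0 = exact fit).
    Returns a one-hot over {tight, regular, loose} followed by the raw ratio.
    """
    tight, loose = thresholds
    if fit < tight:
        one_hot = [1.0, 0.0, 0.0]
    elif fit <= loose:
        one_hot = [0.0, 1.0, 0.0]
    else:
        one_hot = [0.0, 0.0, 1.0]
    return one_hot + [fit]


def infuse(node_feats: List[Vector], attr: Vector) -> List[Vector]:
    """Append the same garment-level attribute encoding to every vertex feature."""
    return [list(f) + list(attr) for f in node_feats]


def message_pass(node_feats: List[Vector], edges: List[Tuple[int, int]]) -> List[Vector]:
    """One unweighted message-passing step: h_i' = h_i + mean of neighbour features.

    Edges are undirected (i, j) pairs. Learned weights and nonlinearities,
    which a real graph neural network would have, are omitted here.
    """
    n = len(node_feats)
    dim = len(node_feats[0])
    neighbours: List[List[int]] = [[] for _ in range(n)]
    for i, j in edges:
        neighbours[i].append(j)
        neighbours[j].append(i)

    out: List[Vector] = []
    for i in range(n):
        agg = [0.0] * dim
        for j in neighbours[i]:
            for k in range(dim):
                agg[k] += node_feats[j][k]
        if neighbours[i]:
            agg = [a / len(neighbours[i]) for a in agg]
        out.append([h + a for h, a in zip(node_feats[i], agg)])
    return out


# Three garment vertices on a path 0-1-2, each with a 1-D feature.
feats = [[1.0], [2.0], [3.0]]
attr = encode_fit(1.25)  # a loose garment under the assumed thresholds
h = message_pass(infuse(feats, attr), [(0, 1), (1, 2)])
```

Because the same fit encoding is attached to every vertex, each message-passing step can condition its output on how tight or loose the garment is, which is one simple way to let a single network distinguish fold behavior across fits.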


Papers

- Tianxing Li, Rui Shi, Takashi Kanai: “Detail-Aware Deep Clothing Animations Infused with Multi-Source Attributes”, Computer Graphics Forum, 42(1), pp. 231-244, 2023 (presented at Eurographics 2023).

[Paper (author’s version)] [Paper (publisher’s version)] [arXiv paper (draft version)]