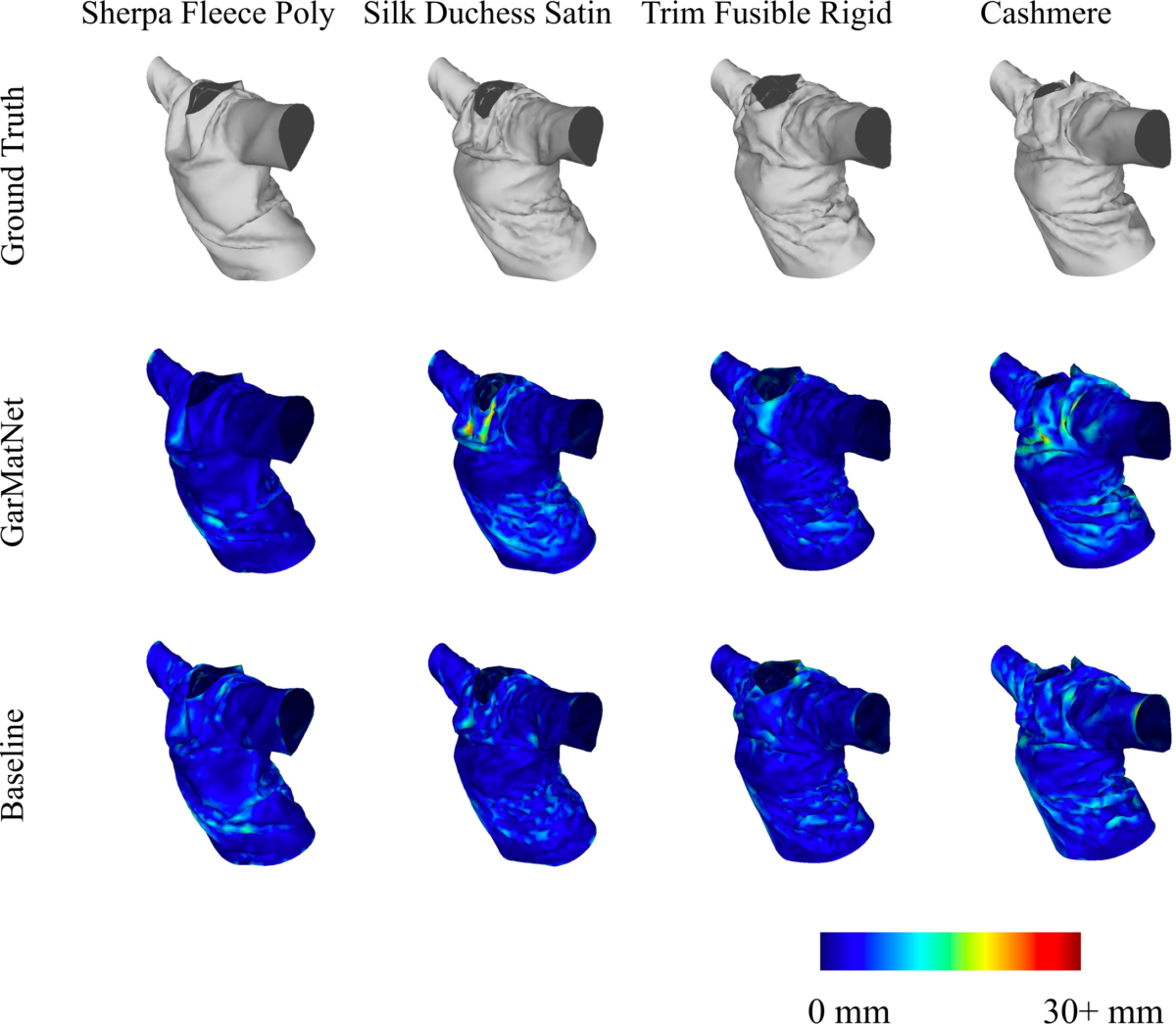

Recent progress in learning-based garment mesh generation has improved efficiency while preserving realism in the generated results. However, no previous work has focused on variation in material type, expressed through parameterized material properties, under static poses. In this work, we propose GarMatNet, a learning-based method that predicts garment deformation as a function of human pose and garment material while preserving detailed wrinkles. GarMatNet consists of two components: a generally-fitting network that predicts a smoothed garment mesh, and a locally-detailed network that adds detailed wrinkles to that smoothed mesh. We hypothesize that material properties play an essential role in garment deformation. Since the influence of material type is relatively small compared with that of pose or body shape, we employ linear interpolation among the different factors to control deformation. More specifically, we use a parameterized material space based on the mass-spring model to express the differences between materials, and we construct a suitable network structure with weight adjustment between material properties and poses. The experimental results demonstrate that GarMatNet's predictions are comparable to physically-based simulation (PBS) and that it offers advantages in generalization ability, model size, and training time over the baseline model.
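The two ingredients named in the abstract, a mass-spring material parameterization and a linear weighting between pose and material contributions, can be illustrated with a minimal sketch. This is not the GarMatNet implementation: the function names `spring_force` and `blend`, the single `stiffness` parameter, and the scalar `material_weight` are all assumptions introduced here for illustration only.

```python
import math


def spring_force(p_a, p_b, rest_length, stiffness):
    """Hooke's-law force acting on particle a from a spring joining a and b.

    `stiffness` stands in for one coordinate of a parameterized material
    space (an assumption; the paper's actual parameters are not given here).
    """
    delta = [b - a for a, b in zip(p_a, p_b)]
    length = math.sqrt(sum(d * d for d in delta))
    if length == 0.0:
        # Coincident particles: direction is undefined, so apply no force.
        return [0.0, 0.0, 0.0]
    magnitude = stiffness * (length - rest_length)
    return [magnitude * d / length for d in delta]


def blend(pose_term, material_term, material_weight):
    """Linearly interpolate between a pose-driven and a material-driven offset.

    `material_weight` in [0, 1] is a hypothetical scalar; a small value
    reflects the smaller influence of material relative to pose.
    """
    return [
        (1.0 - material_weight) * p + material_weight * m
        for p, m in zip(pose_term, material_term)
    ]


# A spring stretched to twice its rest length pulls particle a toward b.
force = spring_force([0.0, 0.0, 0.0], [2.0, 0.0, 0.0], rest_length=1.0, stiffness=10.0)

# A low material weight keeps the result close to the pose-driven offset.
offset = blend([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], material_weight=0.25)
```

In this sketch a stiffer material (larger `stiffness`) produces a proportionally larger restoring force for the same stretch, which is the kind of difference a parameterized material space would need to capture.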

Video

Papers

- Zen Luo, Tianxing Li, Takashi Kanai: “GarMatNet: A Learning-based Method for Predicting 3D Garment Mesh with Parameterized Materials”, 14th ACM SIGGRAPH Conference on Motion, Interaction, and Games (MIG 2021), Article No.4, pp.1-10, 2021.

[Paper (author’s version)] [Paper (publisher’s version)]