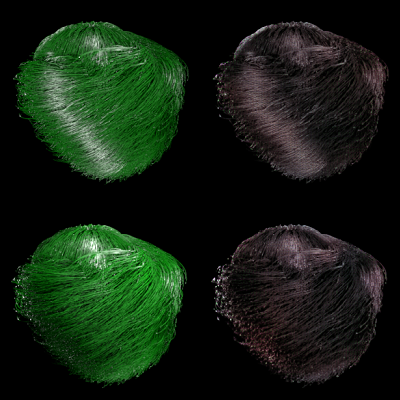

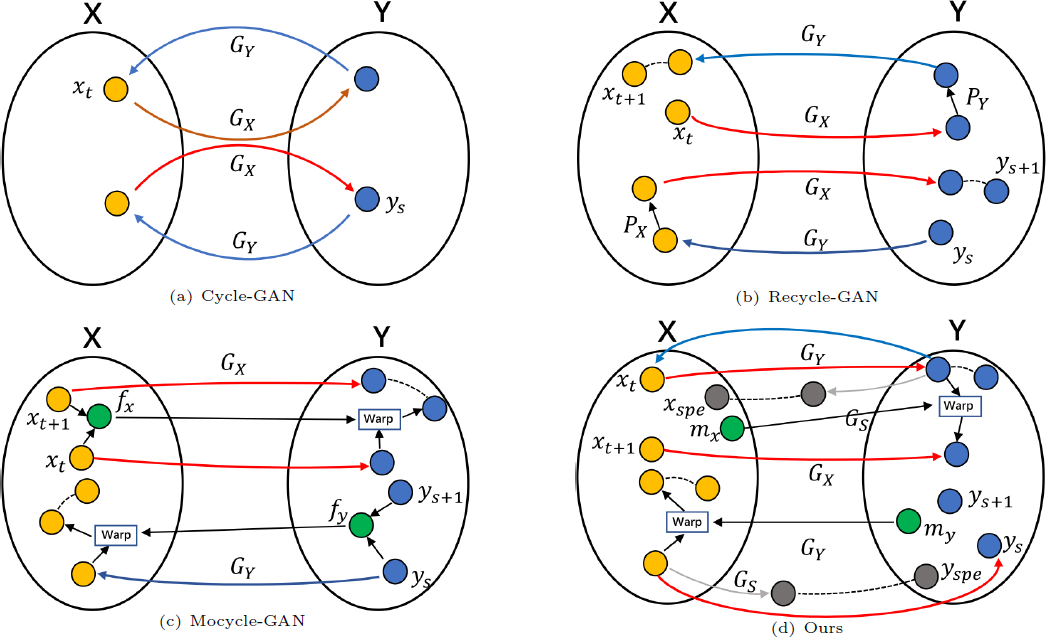

We introduce a GAN-based model for shading photorealistic hair animations. This work aims to shade photorealistic hair by extending unsupervised Generative Adversarial Networks. Our model is much faster than previous, computationally expensive rendering algorithms and produces fewer artifacts than other neural image translation methods. The main idea is to extend the CycleGAN structure to reproduce most of the semi-transparent appearance of hair and to accurately capture its interaction with the lights in the scene. We introduce two constraints that keep the animation temporally coherent and its highlights stable. Our approach outperforms previous methods and is computationally more efficient.
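The abstract does not spell out the training objective, so the following is only a rough illustration of the kind of terms involved, not the authors' method. It shows the standard CycleGAN cycle-consistency loss, which penalizes a round trip through both generators, plus a hypothetical temporal term that asks consecutive translated frames to change the way the input frames change. The function names `cycle_consistency_loss` and `temporal_loss`, the L1 form of the temporal term, and the toy linear generators in the usage example are all assumptions made for illustration; the paper's highlight-stability constraint is not modeled here.

```python
import numpy as np


def l1(a, b):
    """Mean absolute error between two arrays of the same shape."""
    return float(np.mean(np.abs(a - b)))


def cycle_consistency_loss(x, y, G, F):
    """Standard CycleGAN cycle loss: |F(G(x)) - x|_1 + |G(F(y)) - y|_1.

    G maps domain X -> Y and F maps Y -> X (e.g. a rough hair render
    to a photorealistic image and back).
    """
    return l1(F(G(x)), x) + l1(G(F(y)), y)


def temporal_loss(frames, G):
    """Hypothetical temporal-coherence term (an assumption, not the paper's).

    For each pair of consecutive input frames, compare the frame-to-frame
    change of the translated output with the change of the input, and
    average the L1 differences over all pairs.
    """
    diffs = []
    for prev, curr in zip(frames[:-1], frames[1:]):
        out_change = G(curr) - G(prev)
        in_change = curr - prev
        diffs.append(l1(out_change, in_change))
    return float(np.mean(diffs))


if __name__ == "__main__":
    # Toy generators that are exact inverses, so the cycle loss is ~0.
    G = lambda img: 0.5 * img + 0.1
    F = lambda img: (img - 0.1) / 0.5

    x = np.zeros((4, 4))
    y = np.ones((4, 4))
    print("cycle loss:", cycle_consistency_loss(x, y, G, F))

    frames = [np.zeros((2, 2)), np.full((2, 2), 0.2)]
    print("temporal loss:", temporal_loss(frames, G))
```

In a real training setup these terms would be computed on tensors inside an autodiff framework and weighted against the adversarial losses; plain NumPy is used here only to keep the sketch self-contained.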

Papers

- Zhi Qiao, Takashi Kanai: “A GAN-based Temporally Stable Shading Model for Fast Animation of Photorealistic Hair”, Computational Visual Media, 7(1), pp. 127–138, March 2021.
  [Paper (publisher’s version)]
- Zhi Qiao, Takashi Kanai: “An Energy-Conserving Hair Shading Model Based on Neural Style Transfer”, Proc. Pacific Graphics 2020, short paper, pp. 1–6, 2020.
  [Paper (author’s version)] [Paper (publisher’s version)]